Data Representation

Sparse Format Specialization

The processing time of sparse representations of local discrete operators depends heavily on the storage format. The requirements of high accuracy, minimal memory footprint, high data locality, parallelism-friendly layout, wide applicability, and easy modifiability conflict with one another, so only case-specific choices lead to satisfactory results.
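To make the trade-off concrete, the following minimal sketch stores the same operator in two common sparse formats. CSR and ELLPACK are chosen here purely as illustrations; they are not necessarily the formats this project specializes. All function names, including `laplacian_1d`, are hypothetical helpers. CSR stores only the non-zeros but has rows of varying length, while ELLPACK pads every row to the same width, which gives a regular, parallelism-friendly layout at the cost of extra memory.

```python
def laplacian_1d(n):
    """Dense 1D Laplacian stencil (-1, 2, -1), used only as a small test operator."""
    return [[2 if i == j else -1 if abs(i - j) == 1 else 0 for j in range(n)]
            for i in range(n)]


def to_csr(dense):
    """Compressed Sparse Row: keep only the non-zeros; row lengths may differ."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, a in enumerate(row):
            if a != 0:
                values.append(a)
                col_idx.append(j)
        row_ptr.append(len(values))  # end of this row in values/col_idx
    return values, col_idx, row_ptr


def to_ell(dense):
    """ELLPACK: pad every row to the width of the longest row."""
    width = max(sum(1 for a in row if a != 0) for row in dense)
    values, col_idx = [], []
    for row in dense:
        entries = [(a, j) for j, a in enumerate(row) if a != 0]
        entries += [(0, 0)] * (width - len(entries))  # zero padding, column 0
        values.append([a for a, _ in entries])
        col_idx.append([j for _, j in entries])
    return values, col_idx, width


def spmv_csr(csr, x):
    """y = A x with a variable-length inner loop per row."""
    values, col_idx, row_ptr = csr
    return [sum(values[k] * x[col_idx[k]]
                for k in range(row_ptr[i], row_ptr[i + 1]))
            for i in range(len(row_ptr) - 1)]


def spmv_ell(ell, x):
    """y = A x with the same fixed-length inner loop for every row."""
    values, col_idx, width = ell
    return [sum(values[i][k] * x[col_idx[i][k]] for k in range(width))
            for i in range(len(values))]
```

For this stencil the padding overhead is small because almost all rows have the same length. An operator with a few very long rows would make ELLPACK waste most of its storage, which is one example of why the best format depends on the case.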

Parallel Adaptive Data Structures

While GPUs and other highly parallel devices excel at processing regularly structured data, their large SIMD width and high core count quickly lead to inefficiencies when faced with fine-grained branching and complex synchronization. However, the adaptive data structures that cause such problems are indispensable for capturing multi-scale phenomena. We must rethink our data arrangement in order to reconcile parallel and adaptive requirements.

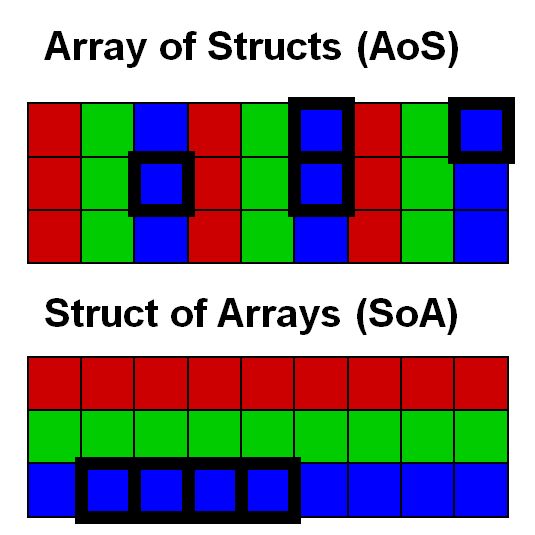

Data Layout Abstractions

Data layout has a tremendous impact on performance, in particular on high-throughput devices. But traditional languages force the programmer to specify the memory layout of multi-valued data containers in a syntax that makes it impossible to change the layout later without modifying every access to the container. Data layout abstractions make it possible to switch between array-of-structs and struct-of-arrays layouts with a single parameter while keeping the traditional access syntax.
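The idea can be sketched in a few lines. The container below is a hypothetical illustration, not the project's actual abstraction: `LayoutContainer`, its `layout` parameter, and the helper `fill` are all assumed names. It keeps one flat buffer and maps the familiar `c[i].x` access onto either an array-of-structs or a struct-of-arrays offset. Python is used only to show the indexing logic; the performance benefit itself arises in compiled code on the target device.

```python
class _Element:
    """Proxy returned by container[i]; forwards field access to the flat buffer."""
    __slots__ = ("_c", "_i")

    def __init__(self, container, i):
        object.__setattr__(self, "_c", container)
        object.__setattr__(self, "_i", i)

    def __getattr__(self, name):
        # Only called for names that are not slots, i.e. for field names.
        try:
            return self._c._data[self._c._offset(self._i, name)]
        except KeyError:
            raise AttributeError(name) from None

    def __setattr__(self, name, value):
        try:
            self._c._data[self._c._offset(self._i, name)] = value
        except KeyError:
            raise AttributeError(name) from None


class LayoutContainer:
    """Multi-valued container whose memory layout is chosen by one parameter."""

    def __init__(self, fields, size, layout="aos"):
        if layout not in ("aos", "soa"):
            raise ValueError("layout must be 'aos' or 'soa'")
        self._fields = {name: k for k, name in enumerate(fields)}
        self._size = size
        self._layout = layout
        self._data = [0.0] * (size * len(fields))  # one flat buffer

    def _offset(self, i, name):
        k = self._fields[name]
        if self._layout == "aos":
            return i * len(self._fields) + k   # x0 y0 x1 y1 ...
        return k * self._size + i              # x0 x1 ... y0 y1 ...

    def __len__(self):
        return self._size

    def __getitem__(self, i):
        if not 0 <= i < self._size:
            raise IndexError(i)
        return _Element(self, i)


def fill(c):
    """Client code: identical for both layouts."""
    for i in range(len(c)):
        c[i].x = i
        c[i].y = 10 * i
```

The client function `fill` never mentions the layout. Switching from `LayoutContainer(("x", "y"), n, layout="aos")` to `layout="soa"` changes where the values land in the buffer without touching any access.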

GPGPU Geometric Refinement

GPUs process data of uniform resolution very quickly through massively data-parallel execution. But even massive parallelism cannot compete with adaptive methods when the data size grows cubically under uniform refinement. This project develops parallel refinement strategies for grids and particles that make it possible to introduce higher resolution in only parts of the computational domain.
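A back-of-the-envelope count illustrates the gap. The sketch below assumes a 3D grid in which refining a cell replaces it with 8 children, and a deliberately simple adaptive model in which only one out of every `one_in` finest cells is refined at each level. Both function names and the refinement model are assumptions made for illustration, not the project's strategy.

```python
def uniform_cells(base_cells, levels):
    """Every cell is split into 8 children at every level."""
    return base_cells * 8 ** levels


def adaptive_cells(base_cells, levels, one_in):
    """Only one in `one_in` of the currently finest cells is split per level."""
    total = base_cells
    finest = base_cells          # cells that are candidates for refinement
    for _ in range(levels):
        refined = finest // one_in
        total += 7 * refined     # 8 children replace 1 parent: net gain of 7
        finest = 8 * refined     # only the new children can be refined further
    return total
```

Starting from 512 cells and refining three times, `uniform_cells(512, 3)` gives 262144 cells, whereas `adaptive_cells(512, 3, 8)` gives 1856 cells with the same finest resolution in the refined regions.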